How to Remove URLs from Google Search in a Snap!

While most SEOs focus on achieving the highest rankings, sometimes the exact opposite is needed — removing URLs from Google.

For instance, you may be battling outdated or duplicate content, an indexed staging environment, or indexed pages that contain sensitive personal data.

Whatever the situation is, with this guide you'll be able to quickly remove the URLs from Google!

Introduction

While many SEOs are mainly focused on getting their content indexed quickly by Google, the very opposite – getting it removed quickly – is frequently needed too.

Maybe your entire staging environment got indexed, sensitive content that never should have been accessible to Google got indexed, or spam pages added as a result of your website getting hacked are surfacing in Google – whatever it is, you’ll want those URLs to be removed quickly, right?

In this guide, we’ll explain exactly how to achieve that.

Here are the most common situations where you need to remove URLs from Google quickly:

- You’re dealing with duplicate or outdated content

- Your staging environment has been indexed

- Your site has been hacked and contains spam pages

- Sensitive content has accidentally been indexed

In this article, we’ll take a detailed look at all of these situations and how to get these URLs removed as soon as possible.

How to remove URLs with duplicate or outdated content

Having duplicate or outdated content on your website is arguably the most common reason for removing URLs from Google.

Most outdated content holds no value for your visitors, but it can still hold value from an SEO point of view. Meanwhile, duplicate content can significantly hurt your SEO performance, as Google could be confused about which URL to index and rank.

The particular actions you need to take to remove these URLs from Google depend on the context of the pages you want to get removed, as we'll explain below.

When content needs to remain accessible to visitors

Sometimes URLs need to remain accessible to visitors, but you don’t want Google to index them, because they could actually hurt your SEO. This applies to duplicate content for instance.

Let's take an example:

You run an online store, and you’re offering t-shirts that are exactly the same except for their different colors and sizes. The product pages don’t have unique product descriptions; they just each have a different name and image.

In this case, Google may consider the content of their product pages to be near-duplicate.

Having near-duplicate pages leads to Google both having to decide which URL to choose as the canonical one to index and spending your precious crawl budget on pages that don’t add any SEO value.

In this situation, you have to signal to Google which URLs need to be indexed, and which need to be removed from the index. Your best course of action for a URL depends on these factors:

- The URL has value: if the URL is receiving organic traffic and/or incoming links from other sites, canonicalize it to the preferred URL that you want to have indexed. Google will then assign its value to the preferred URL, while the other URLs still remain accessible to your visitors.

- The URL has no value: if the URL isn’t receiving organic traffic and doesn’t have incoming links from other sites, just implement the noindex robots tag. This sends Google a clear message not to index the URL, resulting in them not showing it on the search engine results pages (SERPs). It's important to understand that in this case, Google won’t consolidate any value.
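The choice between the two options above can be sketched in a few lines of Python. This is only an illustration: the function name `indexing_tag`, its parameters, and the example URL are our own assumptions, and deciding whether a URL "has value" is left to you.

```python
from html import escape


def indexing_tag(has_value: bool, preferred_url: str) -> str:
    """Return the <head> tag for a near-duplicate page (hypothetical helper)."""
    if has_value:
        # The URL has traffic and/or links: consolidate signals on the preferred URL.
        return f'<link rel="canonical" href="{escape(preferred_url, quote=True)}">'
    # The URL has no value: ask search engines not to index it.
    return '<meta name="robots" content="noindex">'


print(indexing_tag(True, "https://www.example.com/t-shirt"))
print(indexing_tag(False, "https://www.example.com/t-shirt"))
```

Either tag belongs in the `<head>` of the duplicate page, not on the preferred URL itself.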

Having lots of low-quality, thin, or duplicate content can negatively impact your SEO efforts. If you have duplicate content issues, you don't necessarily need to remove the offending pages; you can canonicalize them instead if they're needed for other reasons. You could also merge the duplicated pages to create a stronger, higher-quality piece of content. I recently purged content on a website and saw a 32% increase in organic traffic for the entire website.

If you want to avoid duplicate content issues on product variants, it's essential to build a solid SEO strategy and be ready to adapt if you see the need for change.

Suppose your catalog consists solely of simple (child) products where each product represents a specific variation. In that case, you will surely want to index them all, even though the differences between product variations aren't significant. Still, you will need to closely monitor their performance and, if any duplicate content issues emerge, introduce parent products to your online store. Once you start showing parent products on the frontend, you need to adjust your indexing strategy.

When you have both parent and child products visible on the frontend as separate items, I strongly suggest implementing the same rel canonical on all products to avoid duplicate content issues. In these circumstances, the preferred version should be a parent product that serves as a collection of all product variants. This change will not only improve your store's SEO, but it will also give a significant boost to its UX performance since your customers will be able to find their desired product variant more easily.

All of this, of course, refers only to products with the same or very similar content. If you have unique content on all product pages, each page should have a self-referencing canonical URL.

When content shouldn’t remain accessible to visitors

If there's outdated content on your website that no one should see, there are two possible ways to handle it, depending on the context of the URLs:

- If the URLs have traffic and/or links: implement 301 redirects to the most relevant URLs on your website. Avoid redirecting to irrelevant URLs, as Google might consider these to be soft-404 errors. This would lead to Google not assigning any value to the redirect target.

- If the URLs don’t have any traffic and/or links: return the HTTP 410 status code, telling Google that the URLs were permanently removed. Google's usually very quick to remove the URLs from its index when you use the 410 status code.
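As a rough sketch of the two cases above, the lookup below returns a 301 with a `Location` header for retired URLs that have a relevant replacement, and a 410 for URLs that are simply gone. The paths in `REDIRECTS` and `GONE` and the function name `retired_response` are hypothetical; in practice this logic usually lives in your web server or CMS configuration.

```python
from http import HTTPStatus
from typing import Dict, Optional, Tuple

# Hypothetical examples: old URLs with traffic/links and their most relevant targets.
REDIRECTS = {"/summer-sale-2019": "/sale"}
# Hypothetical examples: old URLs without traffic or links.
GONE = {"/discontinued-widget"}


def retired_response(path: str) -> Optional[Tuple[HTTPStatus, Dict[str, str]]]:
    """Return (status, headers) for a retired path, or None if the path is still live."""
    if path in REDIRECTS:
        return HTTPStatus.MOVED_PERMANENTLY, {"Location": REDIRECTS[path]}
    if path in GONE:
        return HTTPStatus.GONE, {}
    return None
```

A path that is in neither collection returns `None`, so the application can handle it as usual.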

Once you've implemented the redirects, still submit the old sitemap to Google Search Console as well as the new one and leave it there for 3-4 months. This way, Google will pick up the redirects quickly and the new URLs will begin to show in the SERPs.

Remove cached URLs with Google Search Console

Google usually keeps a cached copy of your pages, which it may take quite long to update or remove. If you want to prevent visitors from seeing the cached copy of a page, use the "Clear Cached URL" feature in Google Search Console.

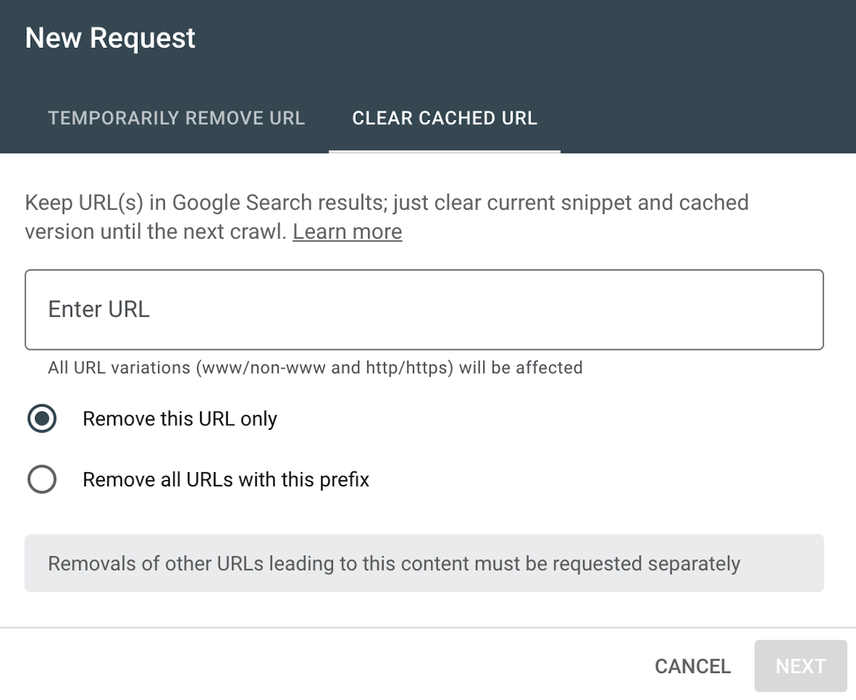

How to clear cached URLs using Google Search Console

- Sign in to your Google Search Console account.

- Select the right property.

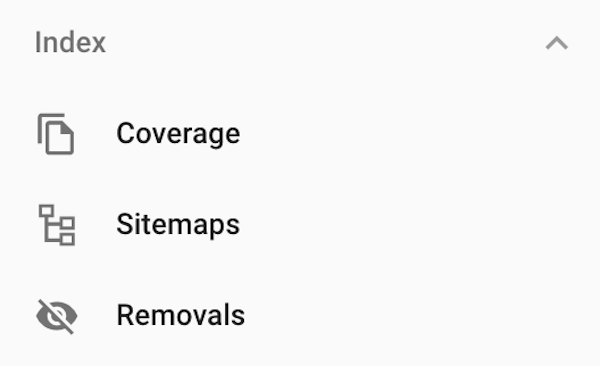

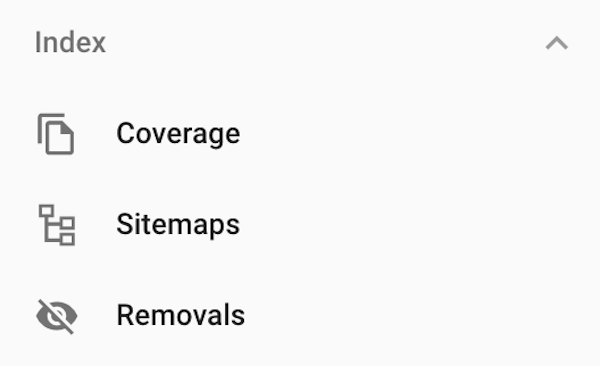

- Click the

Removalsbutton in the left-column menu.

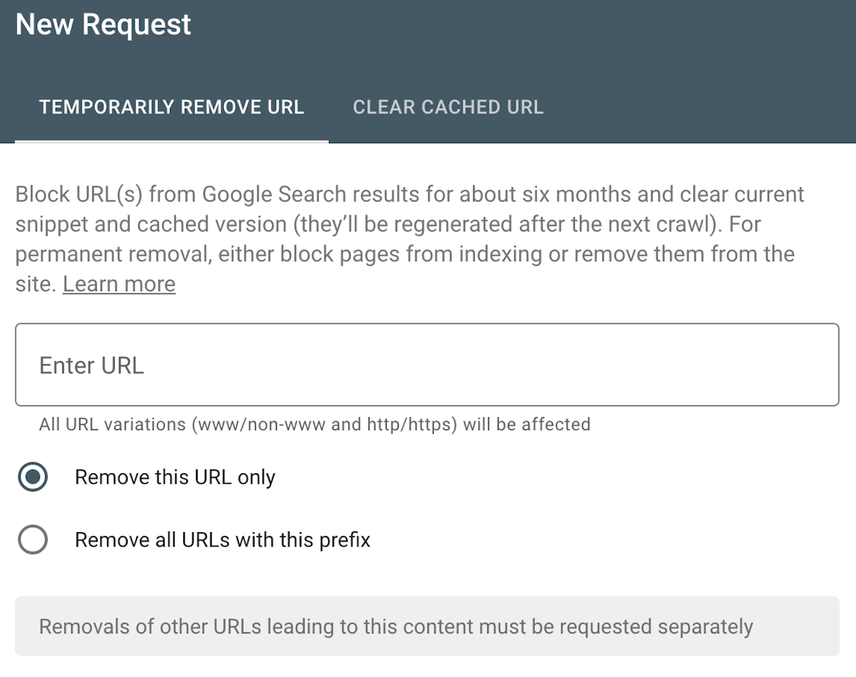

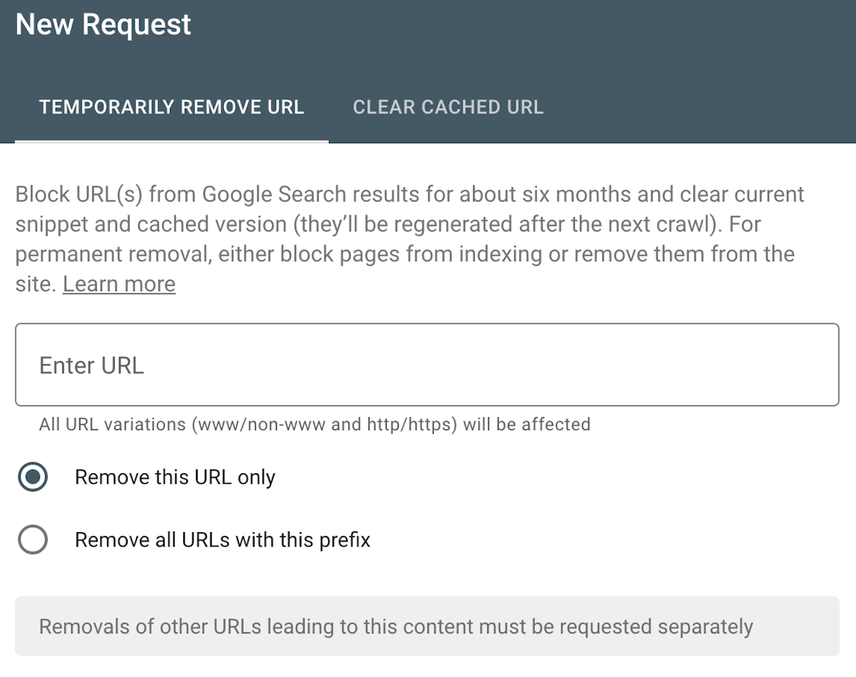

4. Click the NEW REQUEST button.

5. Switch to the CLEAR CACHED URL tab.

6. Choose whether you want Google to remove the cache for just one URL, or for all URLs that start with a certain prefix.

7. Enter the URL, and hit Next.

Please note that you can instruct Google not to keep cached copies of your pages by using the noarchive meta robots tag.

How to remove staging environment URLs

Staging and acceptance environments are used for testing releases and approving them. These environments are not meant to be accessible and indexable for search engines, but they often mistakenly are – and then you end up with staging-environment URLs (“staging URLs” from here on out) that have been indexed by Google.

It happens, live and learn.

In this section we’ll explain how to quickly and effectively get those pesky staging URLs out of Google!

When staging URLs aren’t outranking production URLs

In most cases, your staging URLs won't outrank production URLs. If this is the case for you too, just follow the steps to remedy this issue. Otherwise, skip to the next section.

1. Sign in to your Google Search Console account.

2. Select the staging property (or verify it if you haven't already).

3. Click the Removals button in the left-column menu.

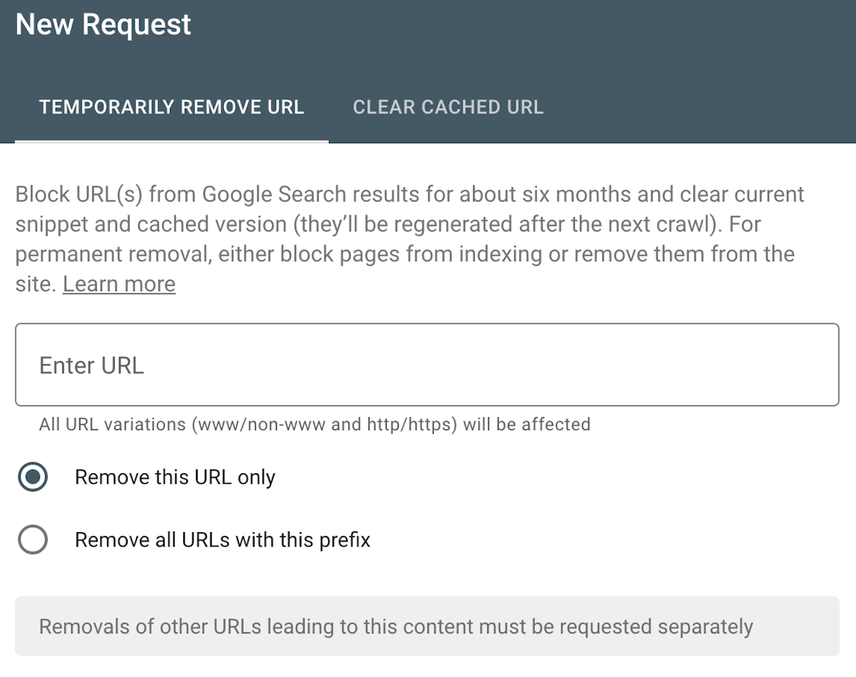

4. Click the NEW REQUEST button, and you'll land on the TEMPORARILY REMOVE URL tab:

5. Choose Remove all URLs with this prefix, enter the URL / and hit the Next button. Google will now keep the URLs hidden for 180 days, but keep in mind that the URLs will remain in Google’s index, so you will need to take additional action to remove them.

6. Remove Google's cached copies of the content by going through the steps described in the Remove cached URLs section.

7. Use the noindex robots directive, either by implementing it through HTML source or through the X-Robots-Tag HTTP header.

8. Create an XML sitemap with the noindexed URLs so Google can easily discover them and process the noindex robots directive.

9. Once you’re confident Google has deindexed the staging URLs, you can remove the XML sitemap and add HTTP authentication to protect your staging environment, thereby preventing this from happening again.
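For step 7, here's one way the X-Robots-Tag HTTP header could be added to every response from a staging environment. It's a minimal sketch that assumes your staging site runs as a Python WSGI application; the wrapper name `add_noindex_header` is our own, and most web servers can set the same header in their configuration instead.

```python
def add_noindex_header(app):
    """Wrap a WSGI app so that every response carries 'X-Robots-Tag: noindex'."""

    def wrapped(environ, start_response):
        def start_with_noindex(status, headers, exc_info=None):
            # Drop any existing X-Robots-Tag so the noindex value is the only one sent.
            headers = [h for h in headers if h[0].lower() != "x-robots-tag"]
            headers.append(("X-Robots-Tag", "noindex"))
            return start_response(status, headers, exc_info)

        return app(environ, start_with_noindex)

    return wrapped
```

Apply the wrapper to the staging deployment only, for example `application = add_noindex_header(application)`, and make sure it never ships to production.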

If you want to remove your URLs from Microsoft Bing, you can do that through its Content Removal Tool.

When staging URLs are outranking production URLs

If your staging URLs are outranking your production URLs, you need to ensure Google assigns the staging URLs’ signals to the production URLs, while at the same time also ensuring that visitors don’t end up on the staging URLs.

- Go through steps 1-6 as discussed in the previous section.

- Then, implement 301 redirects from the staging URLs to the production URLs.

- Set up a new staging environment on a different (sub)domain than the one that got indexed, and be sure to apply HTTP authentication to it to prevent it from being indexed again.
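To illustrate the redirect step, the sketch below computes the production target for a staging URL by swapping the host and keeping the path and query string. The host names `staging.example.com` and `www.example.com` and the function name `production_url` are placeholders, and we're assuming staging and production share the same URL structure.

```python
from urllib.parse import urlsplit, urlunsplit

STAGING_HOST = "staging.example.com"  # hypothetical staging host
PRODUCTION_HOST = "www.example.com"   # hypothetical production host


def production_url(staging_url: str) -> str:
    """Map a staging URL to the production URL a 301 redirect should point at."""
    parts = urlsplit(staging_url)
    if parts.hostname != STAGING_HOST:
        raise ValueError(f"not a staging URL: {staging_url}")
    # Keep path and query; always redirect to HTTPS on the production host.
    return urlunsplit(("https", PRODUCTION_HOST, parts.path or "/", parts.query, ""))
```

Redirecting each staging URL to its exact production counterpart, rather than to the homepage, keeps the redirect targets relevant.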

What to avoid when dealing with indexed staging URLs

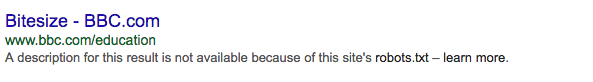

If you want to remove staging environment URLs from Google, never try to do so by using a Disallow: / in your robots.txt file.

That would stop Google from accessing the staging URLs, preventing them from finding out about the noindex robots tag! Google will keep surfacing the staging URLs, just with a very poor snippet.

When deploying website changes to live, talk to your developers about making sure the process is 100% bulletproof. There are some “SEO bits” that could easily harm your website’s progress if not managed correctly.

These involve:

* Robots.txt file.

* Web server config files like .htaccess, nginx.conf or web.config.

* Files you use for the meta tag deployment process (to protect your staging environment from getting indexed and live website from getting de-indexed).

* JS files which are involved in content and DOM rendering.

I’ve seen healthy websites drop in Google’s SERPs just because, during deployment to live, the robots.txt file was overwritten by the staging version containing a Disallow: / directive. I’ve also seen the opposite: the indexing floodgates were opened because important directives were removed.
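A deployment check for the robots.txt scenario could look something like the sketch below, which uses Python's standard `urllib.robotparser` to test whether a robots.txt file blocks the whole site. The function name `blocks_whole_site` is our own, and Python's parser may not match Google's behavior in every edge case, so treat it as a safety net rather than a guarantee.

```python
from urllib.robotparser import RobotFileParser


def blocks_whole_site(robots_txt: str, user_agent: str = "Googlebot") -> bool:
    """Return True if this robots.txt content stops user_agent from fetching '/'."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return not parser.can_fetch(user_agent, "/")


staging_version = "User-agent: *\nDisallow: /"
production_version = "User-agent: *\nDisallow: /admin/"

print(blocks_whole_site(staging_version))
print(blocks_whole_site(production_version))
```

Running a check like this against the robots.txt that's about to go live lets the release pipeline fail before a staging file reaches production.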

How to remove spam URLs

If your website has been hacked, and it contains a ton of spam URLs, you should get rid of them as quickly as possible so that they don’t (further) hurt your SEO performance, and your trustworthiness in the eyes of your visitors.

Follow the steps below to quickly reverse the damage.

Step 1: Use Google Search Console's Removals Tool

Google’s Removals Tool helps you quickly remove spammy pages from Google SERPs. And again, keep in mind that this tool doesn’t deindex the pages – it only temporarily hides them.

How to remove URLs using GSC’s Removals tool

1. Sign in to your Google Search Console account.

2. Select the right property.

3. Click the Removals button in the left-column menu.

4. Click the NEW REQUEST button, and you'll land on the TEMPORARILY REMOVE URL tab:

5. Choose Remove this URL only, enter the URL you want to remove and hit the Next button. Google will now keep the URL hidden for 180 days, but keep in mind that the URL will remain in Google’s index, so you will need to take additional action to remove it.

6. Repeat as often as you need to. If you're dealing with a large number of spam pages, we recommend focusing on hiding the ones that are surfacing most often in Google. Use the Remove all URLs with this prefix option with caution, as it may hide all URLs (potentially thousands) that match the prefix you’ve entered in the Enter URL field.

7. Also remove Google's cached copies of the spam URLs by going through the steps described in the Remove cached URLs section.

Step 2: Remove the spam URLs and serve a 410

Restore your website’s previous state by restoring a backup. Run updates, and then add additional security measures to ensure your site isn’t vulnerable anymore. Then check whether all the spam URLs are gone from your website. It’s best to return a 410 HTTP status code when they are requested, to make it abundantly clear that these URLs are gone and will never return.

Step 3: Create an additional XML sitemap

Include the spam URLs in a separate XML sitemap and submit it to Google Search Console. This way, Google can quickly “eat through” the spam URLs, and you can easily monitor the removal process via Google Search Console.
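A separate sitemap like this is easy to generate. The sketch below builds one with Python's standard library; the function name `build_sitemap` and the example URLs are placeholders for whatever list of spam URLs you've collected.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(urls):
    """Return sitemap XML (as UTF-8 bytes) listing the given URLs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in urls:
        # Each URL gets its own <url><loc>...</loc></url> entry.
        ET.SubElement(ET.SubElement(urlset, "url"), "loc").text = url
    return ET.tostring(urlset, encoding="utf-8", xml_declaration=True)


spam_urls = [
    "https://www.example.com/cheap-pills",     # hypothetical spam URL
    "https://www.example.com/casino-bonus",    # hypothetical spam URL
]
print(build_sitemap(spam_urls).decode("utf-8"))
```

Save the output under its own file name so it stays separate from your regular sitemap and is easy to remove once the URLs are gone from the index.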

What to avoid when dealing with spam URLs

As with a staging environment, avoid adding a Disallow: / to your robots.txt file, as that would prevent Google from recrawling the URLs. Google needs to be able to notice that the spam URLs have been removed.

How to remove URLs with sensitive content

If you collect sensitive data on your website, such as customer details or resumes from job applicants, it’s vital to keep it safe. Under no circumstances should this data get indexed by Google, or by any other search engine for that matter.

However, mistakes are made, and sensitive content can find its way into Google’s search results. No sweat though: we’ll explain how to get this content removed from Google quickly.

Step 1: Use Google Search Console's Removals Tool

Hiding URLs with sensitive content through the GSC’s removal tool is the fastest way to get Google to stop showing them in its SERPs. However, keep in mind that the tool merely hides the submitted pages for 180 days; it doesn’t remove them from Google's index.

How to hide URLs using GSC Removals tool

1. Sign in to your Google Search Console account.

2. Select the right property.

3. Click the Removals button in the left-column menu.

4. Click the NEW REQUEST button, and you'll land on the TEMPORARILY REMOVE URL tab:

5. Choose Remove this URL only, enter the URL you want to remove and hit the Next button. Google will now keep the URL hidden for 180 days, but keep in mind that the URL will remain in Google’s index, so you will need to take additional action to remove it, as outlined in the steps below.

6. Repeat as often as you need to. If the sensitive content is located in a specific directory, we recommend using the Remove all URLs with this prefix option, as that'll allow you to hide all URLs within that directory in one go. If you're dealing with a large number of URLs containing sensitive content that don't share a URL prefix, we recommend focusing on hiding the ones that are surfacing most often in Google.

7. Also remove Google's cached copies of the sensitive content by going through the steps described in the Remove cached URLs section.

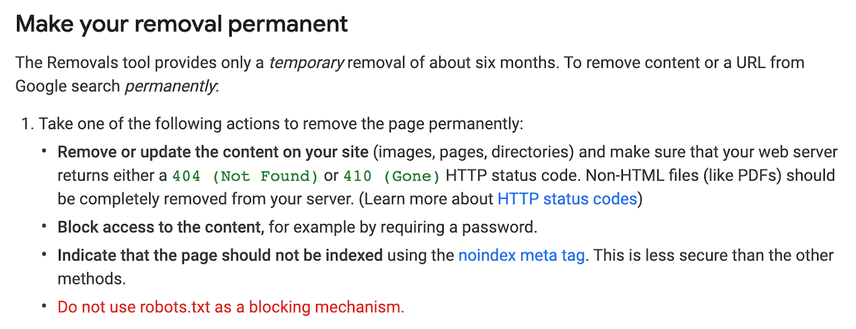

Step 2: Remove the content and serve a 410

If you don’t need to have the sensitive content on your website anymore, you can delete the URLs and return the 410 HTTP status code. That tells Google the URLs have been permanently removed.

Step 3: Use an additional XML sitemap

To control and monitor the process of removing URLs with sensitive content, add them to a separate XML sitemap, and submit it in Google Search Console.

Step 4: Prevent sensitive-data leaks from happening

To prevent sensitive content from getting indexed and leaked again, take appropriate security measures, such as putting the content behind authentication.

Don’t forget about your non-HTML files!

If you apply a noindex tag to your pages, Google can sometimes still find assets and attachments that you don't want to be discoverable, such as PDFs and images. To make sure these are not indexed, you’ll need to use the X-Robots-Tag HTTP header with a noindex directive instead. However, there is a challenge with using robots headers: testing and monitoring for them. Thankfully ContentKing makes this easy!
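If you'd like to spot-check headers yourself, a small helper along these lines can tell you whether a set of response headers carries a noindex directive. The function name `has_noindex` is our own, and the check is deliberately simple: it doesn't handle values scoped to a specific crawler (for example `googlebot: noindex`).

```python
def has_noindex(headers):
    """Return True if the response headers contain an X-Robots-Tag noindex directive."""
    for name, value in headers.items():
        if name.lower() != "x-robots-tag":
            continue
        directives = {d.strip().lower() for d in value.split(",")}
        # 'none' is shorthand for 'noindex, nofollow'.
        if "noindex" in directives or "none" in directives:
            return True
    return False


pdf_headers = {"Content-Type": "application/pdf", "X-Robots-Tag": "noindex, nofollow"}
print(has_noindex(pdf_headers))
```

Feed it the headers returned for a PDF or image URL (for instance from your HTTP client of choice) to confirm the directive is actually being sent.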

How to remove content that's not on your site

If you're finding that other websites are using your content, here are several ways to remove it from Google.

Reach out to the website owner

The first thing you should do is reach out to the people running the website. In a lot of these cases, "the intern" mistakenly copied your content and they'll take swift action. You can suggest that they point a cross-domain canonical to your content along with a link, ask them to 301 redirect it to your own URL, or ask them to just remove it altogether.

What if the website’s owners aren’t responding or refuse to take any action?

If the website’s owners aren’t cooperative, you have a few ways to ask Google to remove it:

- For removal of personal information, you can use this form.

- For legal violations, you can ask Google to evaluate a removal request filed under applicable law.

- If you have found content violating your copyright, you can submit a DMCA takedown request.

If pages have been deleted on another site and Google hasn't caught up yet, you can expedite the removal process by using the Remove outdated content tool.

You can also use it when content has already been updated, but Google's still showing the old snippet and cache. It'll force them to update it.

How to remove images from Google Search

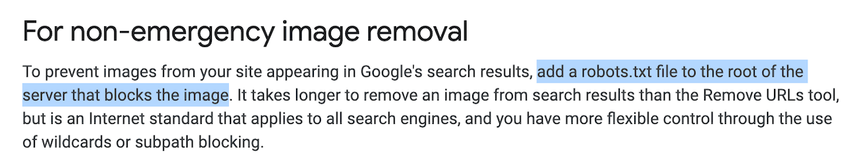

While it's not recommended to use the robots.txt file to remove indexed pages from Google Search, Google does recommend using it to remove indexed images.

We know this sounds confusing, and thanks to Vicky Mills for bringing this up!

Google's documentation isn't very clear on this. In the Removals Tool documentation, a section that covers both HTML and non-HTML files includes the line "Do not use robots.txt as a blocking mechanism."

At the same time, their "Prevent images on your page from appearing in search results" article says:

So, how do you go about removing these images?

Say some images in the folder /images/secret/ have been accidentally indexed. Here's how to remove them:

How to remove images from Google Search

- Go through steps 1-6 in the section above to quickly hide the URLs in Google Search.

- Then, add these lines to your robots.txt:

User-agent: Googlebot-Image

Disallow: /images/secret/

The next time Googlebot downloads your robots.txt, it'll see the Disallow directive for the images and remove the images from its index.

It is not possible to have a noindex meta tag on an image. We could use the X-Robots response header to specify noindex, however Google recommends instead that we rely on the Removals tool or block the problematic image URL with robots.txt.

Luckily, this is the one time a disallow in robots.txt will work to remove URLs from the index - and is recommended by Google for non-emergency image removal.

We can exclude the images from just Google Image Search by specifying the user agent Googlebot-Image, or from all Google searches by specifying Googlebot.
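Before relying on the rule, you can sanity-check it locally. The sketch below uses Python's standard `urllib.robotparser` with the robots.txt lines from the example above; the helper name `is_blocked` and the image file names are our own, and Python's parser is only an approximation of how Google reads the file.

```python
from urllib.robotparser import RobotFileParser

ROBOTS_TXT = """\
User-agent: Googlebot-Image
Disallow: /images/secret/
"""


def is_blocked(robots_txt: str, user_agent: str, path: str) -> bool:
    """Return True if robots_txt disallows user_agent from fetching path."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return not parser.can_fetch(user_agent, path)


# Hypothetical image paths, one inside and one outside the blocked folder.
print(is_blocked(ROBOTS_TXT, "Googlebot-Image", "/images/secret/photo.png"))
print(is_blocked(ROBOTS_TXT, "Googlebot-Image", "/images/public/logo.png"))
```

A check like this helps confirm the Disallow path covers the images you want removed without accidentally blocking the rest of your image folder.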

Conclusion

There are plenty of situations in which you'll want to remove URLs from Google quickly.

Keep in mind there's no "one size fits all" approach to this, as each situation requires a different approach. And if you've been reading between the lines, you'll have noticed that most of the situations in which you need to remove URLs can actually be prevented.

Forewarned is forearmed!

![Tomas Ignatavičius, SEO Lead](https://cdn.sanity.io/images/tkl0o0xu/production/f0b469bb6ec744adf9208167df8745f1ef50e50b-400x400.jpg?fit=min&w=100&h=100&dpr=1&q=95)

![Sophie Gibson, Technical SEO Director](https://cdn.sanity.io/images/tkl0o0xu/production/edb5f46855ebe42ef4b7553dc94dfafd03430035-400x400.jpg?fit=min&w=100&h=100&dpr=1&q=95)